One of the first cool things you want to do with your kitchen computer is teach it to talk. Guests come over and you ask it to play music or open the roller shutters. Cool!

But current solutions come with a cost. Some people don’t care if their conversations are sent to servers on the other side of the world.

I want it to be fully privacy-preserving. Of course we have nothing to hide, but privacy is not about having nothing to hide.

So this has to be fully free and open source software.

There are several FOSS voice assistants to choose from. I went for Mycroft, as it is well integrated with openHAB. It is a solid working solution that backs FOSS. They have even recently open sourced their server backend.

Setting up Mycroft

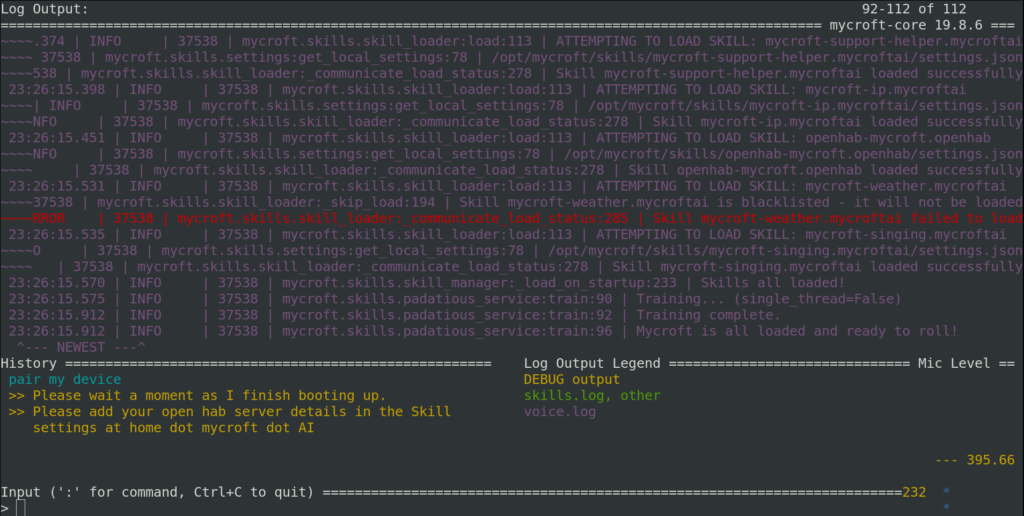

The Mycroft core repository is very well documented. It has a getting-started script that does all the work for you: installing the required Debian packages, setting up the Python virtual environment… it even git-pulls to get the latest commits from the repository. In a few minutes you have a Mycroft instance up and running without further problems.

Two things still need tweaking: running without Mycroft Home and using your own speech2text service.

Running without Mycroft Home

Mycroft Home seems to be a nice service, but I don’t need all the goodies they offer. I just want a local configuration without any data sent to a remote server.

There are some options to set up your own backend, including Mycroft’s own backend or a simpler personal backend.

But you can even use Mycroft without Home. Blacklisting some skills (mycroft-pairing.mycroftai, mycroft-configuration.mycroftai) prevents Mycroft from connecting to Home. Setting these options in the configuration file is enough.
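
As a minimal sketch of that blacklisting step, the snippet below adds the two skills to a configuration dictionary. The skill names come from this post; the `skills` / `blacklisted_skills` keys are my assumption about the layout of the Mycroft configuration file, and the `blacklist_skills` helper is purely illustrative, so check the names against your own installation.

```python
import copy
import json

# Skill names taken from the post. The "skills" / "blacklisted_skills"
# keys are an assumption about the Mycroft configuration layout.
HOME_SKILLS = ["mycroft-pairing.mycroftai", "mycroft-configuration.mycroftai"]


def blacklist_skills(config, skills):
    """Return a copy of config with the given skills added to the blacklist."""
    updated = copy.deepcopy(config)
    blacklist = updated.setdefault("skills", {}).setdefault("blacklisted_skills", [])
    for name in skills:
        if name not in blacklist:  # avoid duplicates when run twice
            blacklist.append(name)
    return updated


# Hypothetical existing settings, only to show that they are preserved.
existing = {"lang": "en-us"}
print(json.dumps(blacklist_skills(existing, HOME_SKILLS), indent=2))
```

The printed JSON is what you would merge into your user configuration file. The helper copies its input so that a half-applied change never leaks into the settings you started from.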

DeepSpeech: Run your own speech2text service

Mycroft is a great tool for listening to the microphone, waiting for the wake word and running a big set of skills: from saying hello to reading a Wikipedia entry. However, it still needs a speech2text service that recognizes your words.

There are a few options for speech2text, including, of course, using Google STT. But an alternative to it is exactly what we want.

Fortunately, people at Mycroft are collaborating with DeepSpeech, a nice project from the Mozilla Foundation based on TensorFlow. At Common Voice they are gathering the data required for training the model, and it is surprisingly easy to start contributing from minute one.

Setting up DeepSpeech for Mycroft is quite straightforward using the DeepSpeech Server Python package. You can easily set up its own Python environment and write a configuration file, and it will start listening for Mycroft’s WAV audio on a local port.
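
To check that the server is really listening, you can send it a WAV file yourself. The sketch below uses only the Python standard library and assumes the server accepts raw WAV bytes in an HTTP POST at `http://localhost:8080/stt`; the host, port and path depend on your own configuration file, and the helper names are mine, not part of any package.

```python
import io
import urllib.request
import wave

# Assumed address of the local speech2text server; adjust to your setup.
STT_URL = "http://localhost:8080/stt"


def silent_wav(seconds=1, rate=16000):
    """Build a mono, 16-bit WAV of silence in memory, just for testing."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16 bit
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * rate * seconds)
    return buffer.getvalue()


def build_stt_request(wav_bytes, url=STT_URL):
    """Prepare the POST request that carries the WAV bytes as its body."""
    return urllib.request.Request(
        url,
        data=wav_bytes,
        method="POST",
        headers={"Content-Type": "audio/wav"},
    )


def transcribe(wav_bytes, url=STT_URL, timeout=30):
    """Send the audio to the server and return whatever text it answers."""
    request = build_stt_request(wav_bytes, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the server running, `transcribe(open("hello.wav", "rb").read())` should return the recognized text (`hello.wav` being any recording of yours). Silence from `silent_wav()` is only useful to confirm that the port answers.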

Finally, you just configure Mycroft to use DeepSpeech.
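
A sketch of what that configuration could look like, generated from Python so it can be printed and pasted. The `stt` section with a `deepspeech_server` module and a `uri` field is my understanding of the Mycroft configuration format, so treat the key names as assumptions, and the URI has to match the port you chose for the server.

```python
import json


def deepspeech_stt_config(uri="http://localhost:8080/stt"):
    """Build the speech2text section pointing Mycroft at a local server.

    The key names ("stt", "module", "deepspeech_server", "uri") are
    assumptions about the Mycroft configuration format.
    """
    return {
        "stt": {
            "module": "deepspeech_server",
            "deepspeech_server": {"uri": uri},
        }
    }


print(json.dumps(deepspeech_stt_config(), indent=2))
```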

And there you are! Your fully open source, local voice assistant working in your kitchen!

Next steps

In Spanish, please. Our mother tongue is Spanish, so we expect to use home automation in that language. While there is an overwhelming set of resources in English, including DeepSpeech models, Spanish resources are not as ready to go. There are, however, posts about using transfer learning and even a published DeepSpeech Spanish model, so training our own model shouldn’t be very hard.

Changing the wake word. Hey Mycroft is not bad, but it would be awesome if you could use your own name. The Mycroft people make it very easy: their Mycroft Precise repository provides a ready-to-train recurrent neural network and a pretty straightforward how-to on training your own wake word.

Screensaver dashboard. Our touch screen’s speakers disconnect when the screen switches to sleep mode. This prevents us from hearing Mycroft’s responses. Besides, there is a lag between touching the screen and it waking up. I am fixing this by writing a simple GNOME extension that prevents the screen from going to sleep at certain times or under certain conditions. It will also show the time, the weather, and icons with shortcuts to the most used programs.

More coming soon!